Welcome to Multimedia Signal Processing Lab!

Our current research areas include:

- Video coding - next generation video coding, transcoding

- Machine learning, multi-modal signal processing, surveillance, health care

- Computer vision, 3D reconstruction and 3D video processing

We are located on the third floor of the Electrical Engineering building (EEB) in room 337.

Semantic Instance Annotation of Street Scenes by 3D to 2D Label Transfer

For research in autonomous cars and robotic applications, a street view video dataset with dense semantic labels will be very useful. Semantic labeling is vital for training models for object recognition, semantic segmentation, or scene understanding. We developed a street-view suburban video dataset, comprising over 400k images and a 3D to 2D label transfer method to obtain 2D ground truth labels.

Next Generation Video Coding

We proposed several techniques including Lagrange Multiplier adaptation, exploiting inter-frame dependency, and improved intra prediction, to achieve better compression performance over the state-of-the-art HEVC/H.265 video coding standard.

[Project Page]

Real-Time Gaze Estimation with a Kinect Camera

We developed a 3D gaze estimation system that can estimate the gaze direction using a Kinect camera. A 3D geometric eye model is constructed to determine the gaze direction. Experimental results indicate that the system can achieve an accuracy of 1.4~2.7 degree and is robust against large head movements. Two real-time applications (playing chess and typing with gaze) are implemented to demonstrate the potential for wide applications.

3D Object Modeling Using a Kinect Camera

We investigated the use of Kinect cameras for acquiring images of an object from multiple viewpoints and building a 3D model of the object. We developed methods to take care of the problems of noisy depth data, symmetrical objects, and with relatively few views. Simulations results show that accurate 3D models could be constructed.

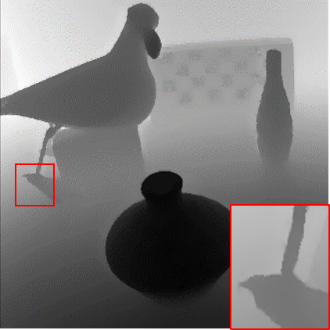

Single Depth Image Super-Resolution

We developed an algorithm for single depth image super resolution. The depth image is upscaled by a guided high resolution edge map, which is constructed from the edges in the low-resolution depth image via a Markov Random Field optimization in a patch synthesis based manner. Experimental results demonstrate the effectiveness of our method both visually and numerically compared to the state-of-art methods.

Activity Recognition

We propose a framework, consisting of several algorithms to recognize human activities that involve manipulating objects using a Kinect camera. Our algorithm identifies objects being manipulated and models high-level tasks being performed accordingly. We evaluate our approach on a challenging dataset of 12 kitchen tasks that involve 24 objects performed by 2 subjects. The entire system yields 82%/84% precision (74%/83%recall) for task/object recognition.

[Paper]